Hi,

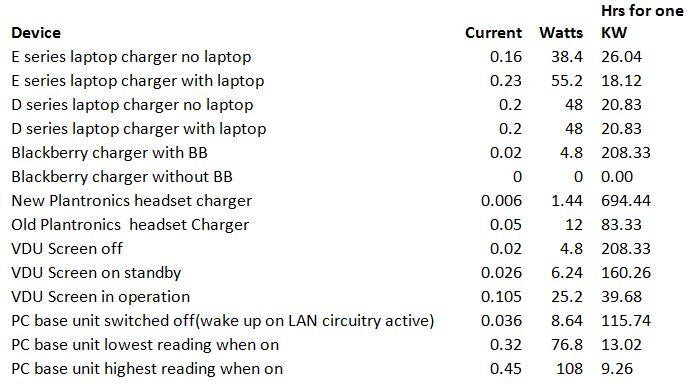

I was hoping someone on here might be able to help me. My office is on a drive to be green and energy efficient. We are looking at the impact of unplugging laptop chargers vs keeping them plugged in. From internet research we have found that our modern laptop chargers go into a standby mode when they are either unplugged from the laptop or the laptop battery is fully charged. The manufacturer states that the power draw in this mode should be lower than 0.5 W. Whilst unplugging would still save some power, the saving isn't really that big, even when you multiply it by the number of desks on site.
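To put rough numbers on that, here is a minimal sketch of the arithmetic. The desk count of 200 and the assumption that chargers stay plugged in all year are made up for illustration and are not our actual figures; the 0.5 W and 35 W values are the manufacturer's figure and our colleague's reading.

```python
HOURS_PER_YEAR = 24 * 365  # assumes chargers are left plugged in all year


def annual_standby_energy_kwh(watts_per_charger, num_chargers, hours=HOURS_PER_YEAR):
    """Energy used per year, in kWh, by chargers idling at a constant power draw."""
    return watts_per_charger * num_chargers * hours / 1000.0


NUM_DESKS = 200  # hypothetical desk count, for illustration only

spec_kwh = annual_standby_energy_kwh(0.5, NUM_DESKS)       # manufacturer's standby figure
measured_kwh = annual_standby_energy_kwh(35.0, NUM_DESKS)  # figure read off the multimeter

print(f"At 0.5 W each: {spec_kwh:.0f} kWh per year")
print(f"At 35 W each:  {measured_kwh:.0f} kWh per year")
```

The two results differ by a factor of 70, which is why it matters to us which figure reflects the energy actually consumed.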

One of our team then came on site with a FLUKE multimeter and found that the power supplies were actually drawing ~35 W of power despite being unplugged from the laptop. His view was that the manufacturer's tests do not state at what phase they were measured, and that the difference is a result of capacitive load being different to inductive load.
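To make the question concrete, this is the relationship as I understand it from my reading: voltage times current gives apparent power (in VA), and real power is that figure multiplied by the power factor. The sketch below only illustrates that formula. The 230 V supply, the 0.15 A current, and the power factor values are all assumptions chosen for illustration, not our measurements, and I do not know what power factor our chargers actually have.

```python
def apparent_power_va(volts_rms, amps_rms):
    """Apparent power in VA: the product of RMS voltage and RMS current."""
    return volts_rms * amps_rms


def real_power_watts(volts_rms, amps_rms, power_factor):
    """Real power in W: apparent power scaled by a power factor between 0 and 1."""
    if not 0.0 <= power_factor <= 1.0:
        raise ValueError("power factor must be between 0 and 1")
    return apparent_power_va(volts_rms, amps_rms) * power_factor


VOLTS = 230.0  # assumed mains voltage
AMPS = 0.15    # assumed current reading, picked so that V x I is about 35 VA

print(f"Apparent power: {apparent_power_va(VOLTS, AMPS):.1f} VA")
for pf in (1.0, 0.5, 0.1, 0.014):  # hypothetical power factors
    print(f"Power factor {pf}: {real_power_watts(VOLTS, AMPS, pf):.2f} W real power")
```

If that formula applies here, the same current reading could correspond to anything from about 35 W down to well under 1 W, depending on a power factor we have not measured.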

I am no expert and, despite researching the above, am still unclear whether we are saving the world in a large way or only a small way by unplugging the devices. I do know that the supplies are completely cold to the touch, which in my basic understanding suggests that the real energy they are using is fairly low.

Could someone enlighten me? Does a high capacitive load still use up a lot of energy when the inductive load is so small?

Thanks for your help, I am really looking forward to being educated.
